15 Technology Trends 2025: Expert Predictions You Can’t Ignore

The technology trends of 2025 will fundamentally reshape how businesses operate and compete in an increasingly digital world. Are you prepared for what’s coming?

The next wave of innovation won’t just deliver incremental improvements; it will bring breakthrough advances in generative AI models, major progress in cybersecurity, and transformative emerging technologies. Decision-makers who understand how these fields interact in 2025 will gain a strong competitive edge.

This comprehensive guide examines 15 critical technology developments that experts predict will dominate the landscape through 2025 and beyond. From quantum computing to blockchain-driven digital identity, agentic AI, and application-specific semiconductors, these are not short-term fads but powerful, lasting drivers of business transformation.

1. Agentic AI

Agentic AI definition

Agentic AI describes autonomous AI systems that can perceive, reason, act, and learn with limited oversight. These systems exhibit goal-driven behavior, adapting to changing environments while making independent decisions. Unlike traditional AI models, which typically optimize toward a narrow, predefined objective, agentic AI systems can pursue broader goals across multiple steps with minimal human intervention.
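The perceive, reason, act, and learn cycle described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the `LineWorld` environment, the `GoalAgent` class, and its step-doubling "learning" rule are hypothetical toys, not part of any real agent framework.

```python
from dataclasses import dataclass, field


@dataclass
class LineWorld:
    """Hypothetical toy environment: the agent moves along a number line."""
    position: int = 0

    def observe(self) -> int:
        return self.position

    def apply(self, step: int) -> None:
        self.position += step


@dataclass
class GoalAgent:
    """Minimal perceive-reason-act-learn loop (illustrative only, not a real framework)."""
    goal: int
    step_size: int = 1
    history: list = field(default_factory=list)

    def perceive(self, env: LineWorld) -> int:
        return env.observe()

    def reason(self, state: int) -> int:
        # Pick a move that reduces the distance to the goal without overshooting.
        if state == self.goal:
            return 0
        direction = 1 if state < self.goal else -1
        return direction * min(self.step_size, abs(self.goal - state))

    def learn(self, before: int, after: int) -> None:
        # Toy "learning" rule (assumption): record the outcome and take
        # bigger steps after any move that made progress.
        self.history.append((before, after))
        if abs(self.goal - after) < abs(self.goal - before):
            self.step_size *= 2

    def run(self, env: LineWorld, max_steps: int = 20) -> int:
        for _ in range(max_steps):
            state = self.perceive(env)   # perceive
            action = self.reason(state)  # reason
            if action == 0:              # goal reached, no human input needed
                break
            env.apply(action)            # act
            self.learn(state, env.observe())  # learn
        return env.observe()


agent = GoalAgent(goal=10)
print(agent.run(LineWorld()))
```

The agent receives only a goal and chooses every move itself, which is the "minimal human intervention" property in miniature. A production agent would replace the hard-coded `reason` step with a model or planner.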

Agentic AI use cases

Organizations are implementing agentic AI across multiple domains. In finance, agents monitor markets and adjust investments. In healthcare, providers use agentic systems to analyze patient data and recommend treatments. Supply chain organizations deploy autonomous software agents for inventory management, predictive logistics, and demand forecasting, reducing significant inefficiencies for their customers.

Agentic AI enterprise adoption

Despite massive AI investments, only 14% of senior leaders report full implementation of agentic AI systems. Yet 70% of surveyed executives say they are experimenting with agentic AI, signaling rapidly growing interest. Common barriers to adoption include trust, governance, and explainability, which organizations can address by investing in trust frameworks, risk mitigation, and transparent design methodologies. Among organizations implementing agentic AI, 66% report efficiency improvements and 57% report cost savings.

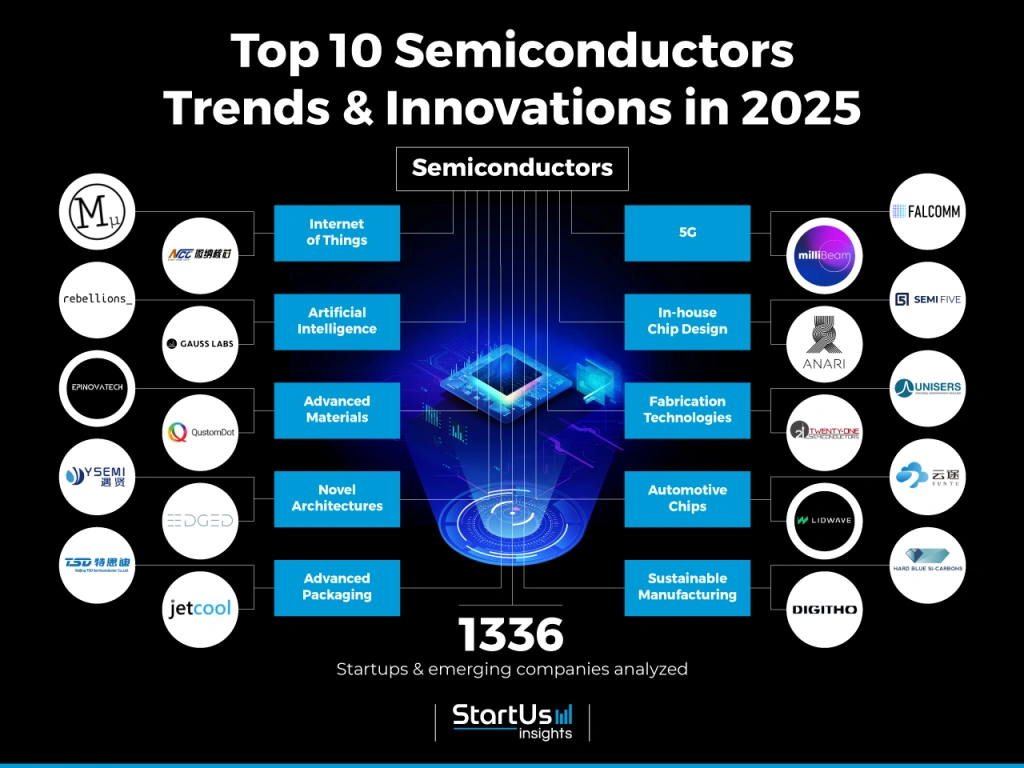

2. Application-Specific Semiconductors

Semiconductor innovation

The industry is shifting toward highly specialized chips optimized for specific functions. Custom silicon is expected to account for nearly 25% of AI accelerators by 2025, with companies tailoring designs to include custom memory interfaces, high bandwidth memory (HBM) customization, and reconfigurable data paths. This specialization is reported to improve efficiency by 35% and reduce total cost of ownership for large facilities by nearly 45%.

AI performance benefits

Graphics Processing Units (GPUs) and Application-Specific Integrated Circuits (ASICs) deliver unmatched performance for specialized AI workloads. Unlike general-purpose processors, these accelerators prioritize throughput and energy efficiency for specific tasks. This specialization matters more as AI adoption spreads, with demand forecast to grow by 44.5% annually. As a result, future systems are expected to contain hundreds of accelerators spread across several racks.
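To put a 44.5% annual growth rate in perspective, compounding it year over year shows how quickly demand multiplies. The short Python sketch below only applies the forecast rate quoted in the text. It does not model any actual market data, and the function name is illustrative.

```python
def growth_multiplier(annual_rate: float, years: int) -> float:
    """How many times larger demand becomes after compounding annual_rate for `years` years."""
    return (1 + annual_rate) ** years


# Illustrative only: apply the 44.5% annual growth forecast quoted above.
for years in (1, 2, 3, 5):
    print(f"{years} year(s): x{growth_multiplier(0.445, years):.2f}")
```

At that rate, demand roughly doubles in two years (about 2.09x), triples in three (about 3.02x), and grows about 6.3x in five.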

Investment in semiconductors

The application-specific semiconductor industry invested 17.7% of its revenue in R&D in 2024, totaling $62.70 billion. Global demand is forecast to exceed $627 billion in 2025 and to reach $857 billion by 2028. The generative AI chip market alone was worth over $125 billion in 2024, representing 25.7% of total chip sales.
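The two demand forecasts above imply an average growth rate. Assuming 2025 demand of roughly $627 billion and $857 billion in 2028, the compound annual growth rate (CAGR) is (end / start)^(1 / years) - 1. The Python sketch below computes it; the function name is illustrative.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate that turns start_value into end_value over `years` years."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Forecast figures quoted above, in billions of US dollars (2025 -> 2028).
rate = implied_cagr(627, 857, 2028 - 2025)
print(f"Implied growth: {rate:.1%} per year")  # Implied growth: 11.0% per year
```

The result is roughly 11% per year. Because the 2025 figure is stated as a lower bound, the implied rate would be somewhat lower if 2025 demand ends up above $627 billion.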

3. Cloud and Edge Computing

Cloud-edge architecture

Cloud computing is evolving toward a blend of centralized and distributed systems as we enter 2025. This hybrid cloud-edge approach is becoming a critical technology trend for organizations seeking to balance performance with regulatory requirements.

Latency and data sovereignty

By 2025, global data is expected to reach 200 zettabytes, with 50% stored in the cloud. This massive growth drives demand for real-time processing that traditional batch approaches cannot satisfy. Different edge deployment levels offer varying latency reductions, from several seconds (regional edge) to 15–20ms at aggregation points. Simultaneously, data sovereignty concerns are dramatically reshaping cloud strategies, as organizations confront complex regulations determining where data resides and which jurisdictions govern it.
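A sovereignty-aware placement decision can be expressed as a filter followed by an optimization. First, discard sites in jurisdictions the policy forbids. Then choose the fastest remaining site. The Python sketch below assumes a hypothetical residency policy, site names, and latency figures. Real placement engines weigh many more factors.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Site:
    name: str
    jurisdiction: str
    latency_ms: float  # round-trip time from the user (hypothetical values)


# Hypothetical residency policy: jurisdictions allowed to hold each data class.
RESIDENCY_RULES = {
    "eu_personal": {"EU"},
    "us_health": {"US"},
    "public": {"EU", "US", "APAC"},
}


def choose_site(data_class: str, sites: list[Site]) -> Site:
    """Pick the lowest-latency site whose jurisdiction the policy allows for this data class."""
    allowed = RESIDENCY_RULES.get(data_class)
    if allowed is None:
        raise KeyError(f"no residency rule for {data_class!r}")
    # Sovereignty first: only compliant sites are candidates.
    candidates = [s for s in sites if s.jurisdiction in allowed]
    if not candidates:
        raise LookupError(f"no compliant site for {data_class!r}")
    # Performance second: the fastest compliant site wins.
    return min(candidates, key=lambda s: s.latency_ms)


sites = [
    Site("frankfurt-edge", "EU", 18.0),
    Site("virginia-region", "US", 95.0),
    Site("singapore-edge", "APAC", 12.0),
]
print(choose_site("eu_personal", sites).name)
```

Personal data restricted to the EU lands on the EU edge site even though another site is faster. Unrestricted public data simply goes to the lowest-latency site.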

Cloud security implications

The distributed nature of edge computing introduces significant security challenges. Organizations are shifting from centralized, perimeter-based security to distributed “security mesh” models that require DevSecOps integration. The hierarchy of networked edge nodes is a double-edged sword: distributing data across nodes provides resilience against localized outages but also expands the attack surface. Approaches such as Zero Trust, micro-segmentation, and multi-factor authentication are fundamental to modern cloud security frameworks.
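Zero Trust ("never trust, always verify") combined with micro-segmentation amounts to a default-deny check on every request. In this minimal Python sketch, the `Request` fields, segment names, and allow-list are hypothetical. Real deployments enforce such policies in identity providers, service meshes, or firewalls rather than in application code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    user: str
    mfa_verified: bool
    device_trusted: bool
    source_segment: str
    target_segment: str


# Hypothetical micro-segmentation policy: explicit allow-list of segment-to-segment flows.
ALLOWED_FLOWS = {
    ("web", "api"),
    ("api", "db"),
}


def authorize(req: Request) -> bool:
    """Deny by default and verify every request, regardless of where it comes from."""
    if not req.mfa_verified:    # identity must be strongly verified (MFA)
        return False
    if not req.device_trusted:  # device posture is checked on every request
        return False
    # Micro-segmentation: only explicitly allowed flows pass; everything else is denied.
    return (req.source_segment, req.target_segment) in ALLOWED_FLOWS
```

A compromised web server therefore cannot reach the database directly, because no `("web", "db")` flow exists. This limits how far an attacker can move across the expanded edge attack surface.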

4. Quantum Technologies

Quantum computing basics

Unlike classical computers, which use bits (0s and 1s), quantum computers use qubits, which can exist in a superposition of states. This property lets quantum algorithms work with many computational paths at once. Qubits can also be entangled, so the state space a quantum computer can represent grows exponentially with the number of qubits. In principle, a few dozen qubits can represent tens of billions of states simultaneously, although practical speedups depend on further hardware advances. Target applications in 2025 include simulation, optimization, AI, and prime factorization, though factoring numbers large enough to threaten today’s encryption remains beyond current hardware.
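One way to see why qubit counts matter is to simulate a small register classically. The pure-Python sketch below stores an n-qubit state as 2**n complex amplitudes. It applies a Hadamard gate to create superposition and a CNOT gate to create entanglement, producing the Bell state (|00⟩ + |11⟩)/√2. It is a teaching toy, not a quantum SDK, and the function names are illustrative.

```python
import math


def zero_state(n_qubits: int) -> list[complex]:
    """State vector for n qubits all in |0>: 2**n complex amplitudes."""
    state = [0j] * (2 ** n_qubits)
    state[0] = 1 + 0j
    return state


def apply_hadamard(state: list[complex], target: int) -> list[complex]:
    """Hadamard gate: puts the target qubit into an equal superposition."""
    h = 1 / math.sqrt(2)
    new = state[:]
    for i in range(len(state)):
        if not (i >> target) & 1:  # visit each (bit=0, bit=1) pair once
            j = i | (1 << target)
            a, b = state[i], state[j]
            new[i] = h * (a + b)
            new[j] = h * (a - b)
    return new


def apply_cnot(state: list[complex], control: int, target: int) -> list[complex]:
    """CNOT gate: flips the target qubit wherever the control qubit is 1."""
    new = state[:]
    for i in range(len(state)):
        if (i >> control) & 1 and not (i >> target) & 1:
            j = i | (1 << target)
            new[i], new[j] = state[j], state[i]
    return new


def probabilities(state: list[complex]) -> list[float]:
    """Measurement probability of each basis state."""
    return [abs(a) ** 2 for a in state]


# H on qubit 0, then CNOT(0 -> 1): an entangled Bell state.
bell = apply_cnot(apply_hadamard(zero_state(2), 0), control=0, target=1)
print([round(p, 3) for p in probabilities(bell)])
```

Measuring the Bell state yields 00 or 11 with equal probability, never 01 or 10, so the two qubits are correlated. Because the vector doubles with every added qubit, 34 qubits already need 2**34 (about 17.2 billion) amplitudes, so classical simulation becomes impractical quickly.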

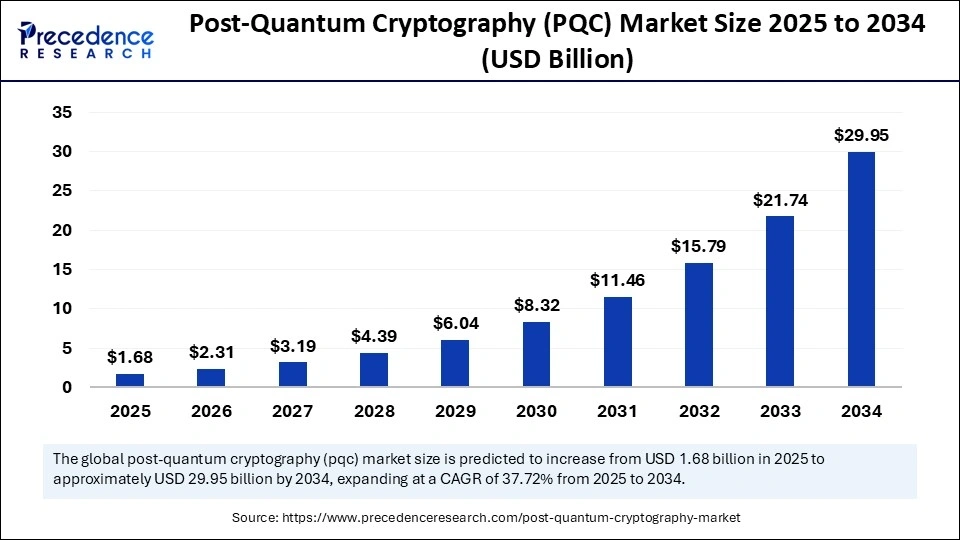

Quantum encryption risks

The exponential processing power of quantum computing presents significant cybersecurity challenges. Currently secure cryptographic systems could become obsolete because Shor’s algorithm could theoretically factor large numbers exponentially faster than classical computers, which would break RSA encryption. NSA research indicates quantum attacks could render existing digital signatures useless by the 2030s. Adversaries are already pursuing “harvest now, decrypt later” strategies, collecting encrypted data to exploit once quantum decryption capabilities mature.
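Shor’s algorithm reduces factoring to finding the order r of a number a modulo N. Only that order-finding step needs a quantum computer. The Python sketch below illustrates the classical reduction, with order finding brute-forced as a stand-in for the quantum subroutine, so it only works for tiny numbers. The function names are illustrative.

```python
import math
from typing import Optional, Tuple


def find_order(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1 (requires gcd(a, n) == 1).

    Shor's algorithm performs this step efficiently on a quantum computer;
    here it is brute-forced classically, which is exponential in the size of n.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def shor_classical(n: int, a: int) -> Optional[Tuple[int, int]]:
    """Try to split n with base a using the order-finding reduction behind Shor's algorithm."""
    g = math.gcd(a, n)
    if g != 1:
        return g, n // g          # lucky guess: a already shares a factor with n
    r = find_order(a, n)
    if r % 2 == 1:
        return None               # odd order: retry with a different a
    y = pow(a, r // 2, n)
    if y == n - 1:
        return None               # trivial square root of 1: retry
    p = math.gcd(y - 1, n)        # y**2 = 1 (mod n) with y != +/-1 gives a factor
    return p, n // p


print(shor_classical(15, 7))  # (3, 5)
```

For N = 15 and a = 7, the order is 4, 7^2 mod 15 = 4, and gcd(3, 15) = 3 recovers the factors 3 and 5. On RSA-sized moduli the brute-force loop is hopeless. A large, error-corrected quantum computer would close exactly that gap.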

Post-quantum cryptography

Organizations must prepare for the post-quantum era through cryptographic agility. The National Institute of Standards and Technology (NIST) published its first standards for quantum-resistant algorithms in August 2024, and widespread adoption is expected to begin in 2025. Enterprises must transition to quantum-resistant protocols to keep their cybersecurity future-proof. Research suggests global organizations need 5–10 years to fully implement new cryptographic standards.
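In practice, cryptographic agility largely means never hard-coding a single algorithm. The minimal Python sketch below shows the pattern with a hypothetical registry. New records use whatever algorithm the policy names, and each stored value carries its algorithm identifier so older records can still be checked after a migration. Standard-library hash functions are used purely for illustration. Hash functions are not the main quantum risk (public-key schemes such as RSA are), and a real migration would register vetted post-quantum implementations such as ML-KEM (NIST FIPS 203) from a dedicated library.

```python
import hashlib
import hmac
from typing import Optional

# Hypothetical registry mapping algorithm identifiers to implementations.
# Migrating to a new primitive becomes a configuration change, not a code rewrite.
DIGESTS = {
    "sha256": hashlib.sha256,
    "sha3-256": hashlib.sha3_256,
}

# Assumed policy setting: the algorithm used for all new records.
CURRENT_ALGORITHM = "sha3-256"


def fingerprint(data: bytes, algorithm: Optional[str] = None) -> str:
    """Return 'algorithm:hexdigest' so every record names the primitive that produced it."""
    name = algorithm or CURRENT_ALGORITHM
    return f"{name}:{DIGESTS[name](data).hexdigest()}"


def verify_fingerprint(data: bytes, tagged: str) -> bool:
    """Check a record with whichever algorithm it names, so legacy records stay verifiable."""
    name, _, expected = tagged.partition(":")
    if name not in DIGESTS:
        return False  # unknown or retired algorithm
    actual = DIGESTS[name](data).hexdigest()
    return hmac.compare_digest(actual, expected)
```

Retiring an algorithm means removing it from the registry after legacy records are re-processed. Adopting a new one means adding an entry and changing `CURRENT_ALGORITHM`.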

5. Digital Trust and Cybersecurity

Digital trust is becoming a cornerstone of 2025’s technology trends, with organizations implementing advanced governance frameworks to counter escalating threats in an increasingly sophisticated cyber landscape.

Cybersecurity frameworks

The NIST Cybersecurity Framework leads industry adoption, ranked most reliable by 65% of surveyed CISOs. Adoption of frameworks like ISO 27001, CIS Controls (v8), and the EU Cybersecurity Act is expanding. Gartner predicts organizations investing in cybersecurity resilience will reduce breach costs by 40% by 2026.

AI-powered cybersecurity

AI tools already automate 70% of security operations. By 2025, autonomous AI will detect and neutralize over 90% of routine attacks, freeing human experts for complex incidents. AI-driven behavioral analytics are also establishing baselines for normal activity, flagging deviations, and proactively detecting insider threats.
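Baseline-and-deviation detection can be illustrated with a simple statistical model. Learn the mean and spread of a user’s normal activity, then flag observations that fall far outside it. The Python sketch below uses a z-score threshold as a stand-in. Commercial AI-driven tools use far richer models, and the sample activity counts are hypothetical.

```python
import statistics


def build_baseline(history: list[float]) -> tuple[float, float]:
    """Learn 'normal' activity as the mean and standard deviation of past observations."""
    if len(history) < 2:
        raise ValueError("need at least two observations to build a baseline")
    return statistics.mean(history), statistics.stdev(history)


def is_anomalous(value: float, baseline: tuple[float, float], threshold: float = 3.0) -> bool:
    """Flag values more than `threshold` standard deviations from the baseline mean."""
    mean, stdev = baseline
    if stdev == 0:
        return value != mean  # perfectly constant history: any change is a deviation
    return abs(value - mean) / stdev > threshold


# Hypothetical daily file-download counts for one user.
downloads = [40, 42, 38, 45, 41, 39, 43]
baseline = build_baseline(downloads)
print(is_anomalous(44, baseline), is_anomalous(400, baseline))
```

A day with 44 downloads stays within the learned baseline. A sudden spike to 400, a common sign of data exfiltration by an insider, is flagged for review.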